AI threat is real (Image: Getty, Digital Domain/20th Century Fox/Kobal/REX/Shutterstock)

Children should be taught about the risks of artificial intelligence from the moment they get their first mobile phone because the robot age is upon us, experts say. Although Terminator-style killer cyborgs remain – for now – on the pages of science fiction books, technology is already sufficiently advanced to impact all areas of our lives.

The line between truth and disinformation is becoming increasingly hard to see (Image: Getty)

The speed of the breakthroughs has even shocked tech leaders, with more than 1,000, including Elon Musk and Apple co-founder Steve Wozniak, urging companies to pause further research.

However, China’s determination to plough on – driven partly by its ageing population – leaves the West with a headache.

AI already exists and is used in a plethora of daily applications, from deciding which adverts to show you to which emails should be blocked from your inbox.

But OpenAI’s ChatGPT, which allows users to hold a human-like conversation with a machine, has caused alarm with its power.

It has even passed exams, prompting some schools and universities to revisit their marking processes.

AI has already caused a series of difficult incidents.

Last month one chatbot told a man to leave his wife. It took less than two hours of “conversation” with Microsoft’s Bing chatbot for US journalist Kevin Roose to become “deeply unsettled”, when the chatbot told him: “Actually, you’re not happily married. Your spouse and you don’t love each other. You just had a boring Valentine’s Day dinner together.”

Dead cop is rebuilt as a violent law-enforcing robot (Image: Getty)

George Washington University law professor Jonathan Turley was astonished to be told by colleagues he had been included in an AI-generated list of academics involved in sex scandals. The cited source turned out to be a non-existent 2018 Washington Post article, supposedly reporting that Prof Turley had sexually assaulted students during a trip to Alaska.

And last week it emerged that a woman had received a call from someone whose voice she recognised as her son’s, claiming he had been in an accident.

The caller asked for money for police bail. But when she phoned her son back, she discovered that he had not made the call: it was an AI-generated imitation of his voice.

The EU has now introduced new legislation, Italy has become the first Western government to ban ChatGPT due to data privacy concerns and the UK Government has published a white paper setting out a “pro-innovation approach”.

But with China unlikely to heed calls for caution, there is little chance of the brakes being applied.

A look at China’s AI developments highlights the Chinese Communist Party’s main concern: the control of its people.

According to a recent US Government report, the world’s five most accurate developers of facial recognition technology – the mainstay of China’s ubiquitous CCTV network – are now Chinese.

And its demographic time bomb – 43 percent of the population will be drawing pensions by 2050 – has driven major AI innovation in robotics. China has, for the first time, overtaken the US in robot density, reaching 322 industrial robots per 10,000 manufacturing workers.

2001: A Space Odyssey

AI depends heavily on data, which is one reason for the ongoing controversy surrounding the Chinese-owned social media app TikTok.

“AI is fundamentally a technology for prediction and autocratic governments would like to be able to predict the whereabouts, thoughts, and behaviours of citizens,” Prof David Yang of Harvard University said recently.

If China successfully exports its technology, he warned, it could “generate a spreading of similar autocratic regimes to the rest of the world”.

Prof Cai Hengjin, of the AI Research Institute at China’s Wuhan University, said: “One measurement is how fast and powerful AI would grow beyond our imagination.

“Some thought it would grow slowly and we still have decades or even hundreds of years left – but that’s not the case. We only have a couple of years – because our AI advancement is just too fast.”

Prof Mark Lee, of the University of Birmingham, said that while killer robots may be decades away, we should be more concerned about the here and now.

The tech race, he believes, is unstoppable – meaning we should prepare for a new age.

“I am worried we will spend the next few years worrying about killer robots when the real danger is fake news – and it is with us now,” said Prof Lee.

The most obvious incarnations of AI technology are deep fakes – computer-generated photos and videos which use face recognition technology to replicate a person’s face or body. Recent examples include images purporting to show Pope Francis wearing a white puffer jacket and Donald Trump being arrested by police.

AI allows scammers to replicate the voice of loved ones and launch fake calls (Image: Getty)

Just after Russia’s invasion of Ukraine last year, the Kremlin showed how this war would be different by generating a deep fake video purporting to show Ukrainian President Volodymyr Zelensky ordering his troops to surrender.

The same technology now allows scammers to replicate the voice of loved ones and launch fake calls claiming there is an emergency and asking for money. Just a few seconds of video is enough to generate a near-perfect match of someone’s voice, though scammers are, of course, limited by the information they have with which to fend off questions.

Then there are the less obvious applications.

Right now, Russia employs hundreds of people at its troll farm in St Petersburg to push out disinformation. This isn’t new: studies have shown how, during the 2014 Scottish independence referendum, the 2016 Brexit referendum and general elections here and in the US, Russian trolls, intent merely on deepening social divisions, took both sides of each argument and amplified them. Since February last year, these trolls have also been targeting India and South Africa, where condemnation of the invasion is weaker than in many other nations.

“It takes effort and resources to employ banks of trolls – but imagine when autocratic governments are able to do this using AI,” said Prof Lee.

And the problem won’t be limited to rogue states.

Increasingly cheap AI threatens to unleash a deluge of automated media, produced by everyone from shock jocks and single-issue campaigners to larger broadcasters.

AI (Image: David James/REX/Shutterstock)

Just last week UK firm Tovie AI announced an app which can turn text-based news articles into lifelike conversations between different audio avatar personas “within minutes”.

“This solution creates a space where both human experts and virtual hosts can interact, discuss articles, conduct interactive polls, and host various shows on any given topic, 24/7,” says the firm.

Solutions aren’t easy. While Western nations may well pass laws requiring AI-generated material to be watermarked, what about fake material posted by rogue states that do not play by the same rules?

A growing scepticism about who or what to trust will lead people to take refuge with those who agree with them, further amplifying the echo-chamber culture already pervasive across social media, said Prof Lee.

He added: “Different chatbots with different agendas will find you and match your beliefs and back them. People will no longer be talking to each other; they won’t be exchanging views, they will be agreeing with chatbots.”

Ultimately, neither East nor West is giving sufficient thought to the consequences of these developments.

“There is no real sense that these multinationals know where they’re going with these developments as they race against each other to secure the next hit,” said Prof Lee.

“Since it cannot be stopped, the only answer is education.

“We have to teach people how to evaluate information, question information.

“It will require education in critical thinking and scientific method and it will have to begin as soon as you give your kids their first mobile phone and they encounter AI for the first time.”

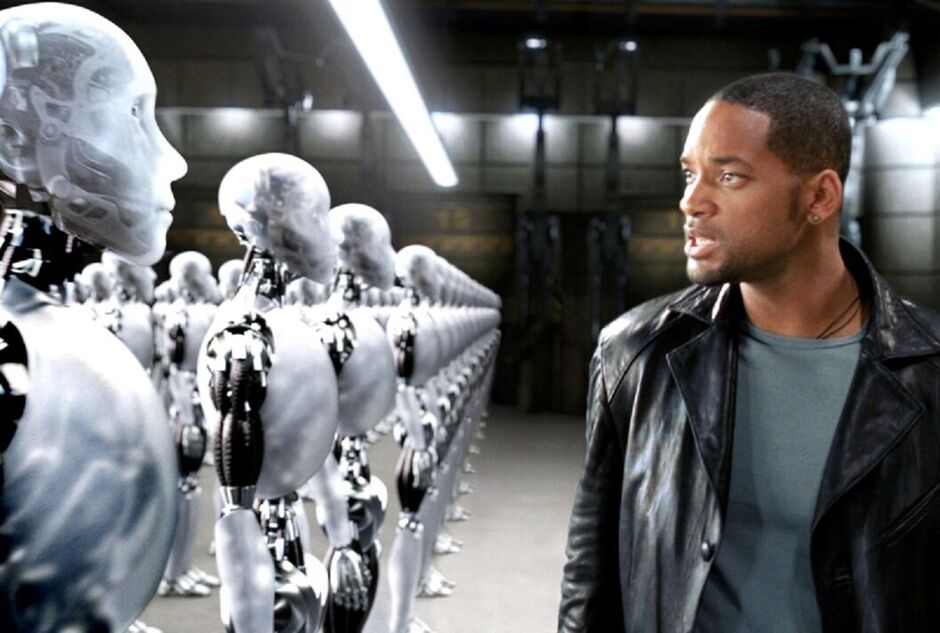

Will Smith in I, Robot (Image: Digital Domain/20th Century Fox/Kobal/REX/Shutterstock)

Stranger than fiction?

2001: A Space Odyssey (1968)

Developed with Arthur C Clarke and inspired by his short story The Sentinel, Stanley Kubrick’s classic film follows a manned mission to Jupiter overseen by the sentient computer HAL. The computer, remembered for its emotionless monotone, controls most of the spacecraft’s systems but then attempts to kill the crew.

Star Wars (1977)

The George Lucas classic features the iconic robots C-3PO and R2-D2. Both appear capable of human feelings, and each plays a key part throughout the series.

Blade Runner (1982)

Ridley Scott’s sci-fi classic, based on a Philip K Dick novel, is set in a dystopian Los Angeles in 2019. Replicants – bioengineered androids – have been banned after going rogue. Some have false memories and even believe themselves to be human. Harrison Ford plays the blade runner sent in to hunt down those who have returned to Earth.

The Terminator (1984)

The cyborg of the title, played by Arnold Schwarzenegger, has been sent back in time from 2029 on a mission to kill Sarah Connor before she becomes a mother. In the film’s future world, the artificial intelligence defence network Skynet has become self-aware and triggered a nuclear war. Sarah’s son goes on to lead the human resistance against the machines.

AI: Artificial Intelligence (2001)

Originally a Stanley Kubrick project, this film was passed to Steven Spielberg and features advanced humanoids called Mechas. One prototype, David, is capable of love. He is abandoned when viewed as a danger and yearns to become a real boy.

I, Robot (2004)

Loosely based on a short story collection by Isaac Asimov, the film is set in a world where humanoid machines are advanced enough to take key roles in civic society. But when a suspicious suicide raises concerns that the machines have turned against their makers, Will Smith’s detective is called in to investigate.

M3GAN (2022)

This science fiction film features an artificially intelligent doll paired with Cady, a nine-year-old orphan. Although they initially bond, M3GAN becomes self-aware, with deadly consequences for anyone who comes between her and her human companion.

AI has already caused a series of difficult incidents (Image: Getty)

Social manipulation

The line between truth and disinformation is becoming increasingly hard to see. This will lead to greater social divisions as people gravitate towards echo chambers – which might themselves be powered by sophisticated AI chatbots.

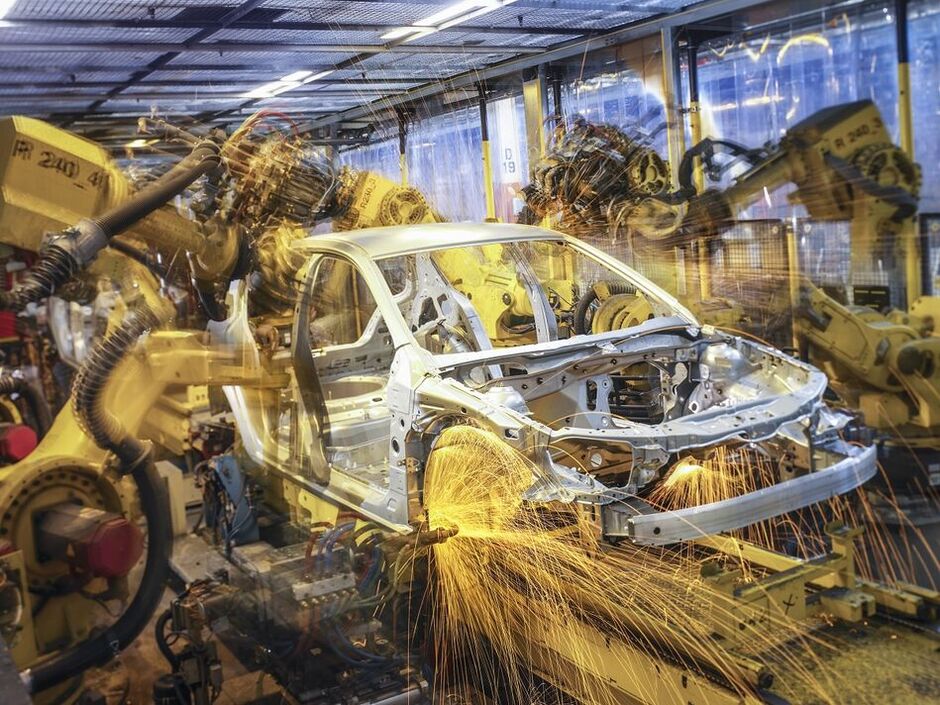

Job losses due to AI automation

According to the World Economic Forum, 85 million jobs could be displaced by automation by 2025. Although 97 million new roles may emerge over the same period, many workers won’t have the skills these more technical roles require.

Autonomous weapons powered by AI

“The key question for humanity today is whether to start a global AI arms race or to prevent it from starting,” said a 2015 open letter signed by thousands of AI and robotics researchers.

“If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.”

Social surveillance with AI technology

China’s use of facial recognition technology in offices and schools might allow the government to gather enough data to monitor a person’s activities, relationships and political views. Other rogue states are likely to adopt the same technology.